Naive Bayes

The key assumption in Naive Bayes is that the features are conditionally independent given the class label. For a feature vector \(x = (x_1, \dots, x_D)\) with \(D\) features, this gives

$$p(x\mid y=c) = \prod_{j=1}^D p(x_j\mid y=c)$$

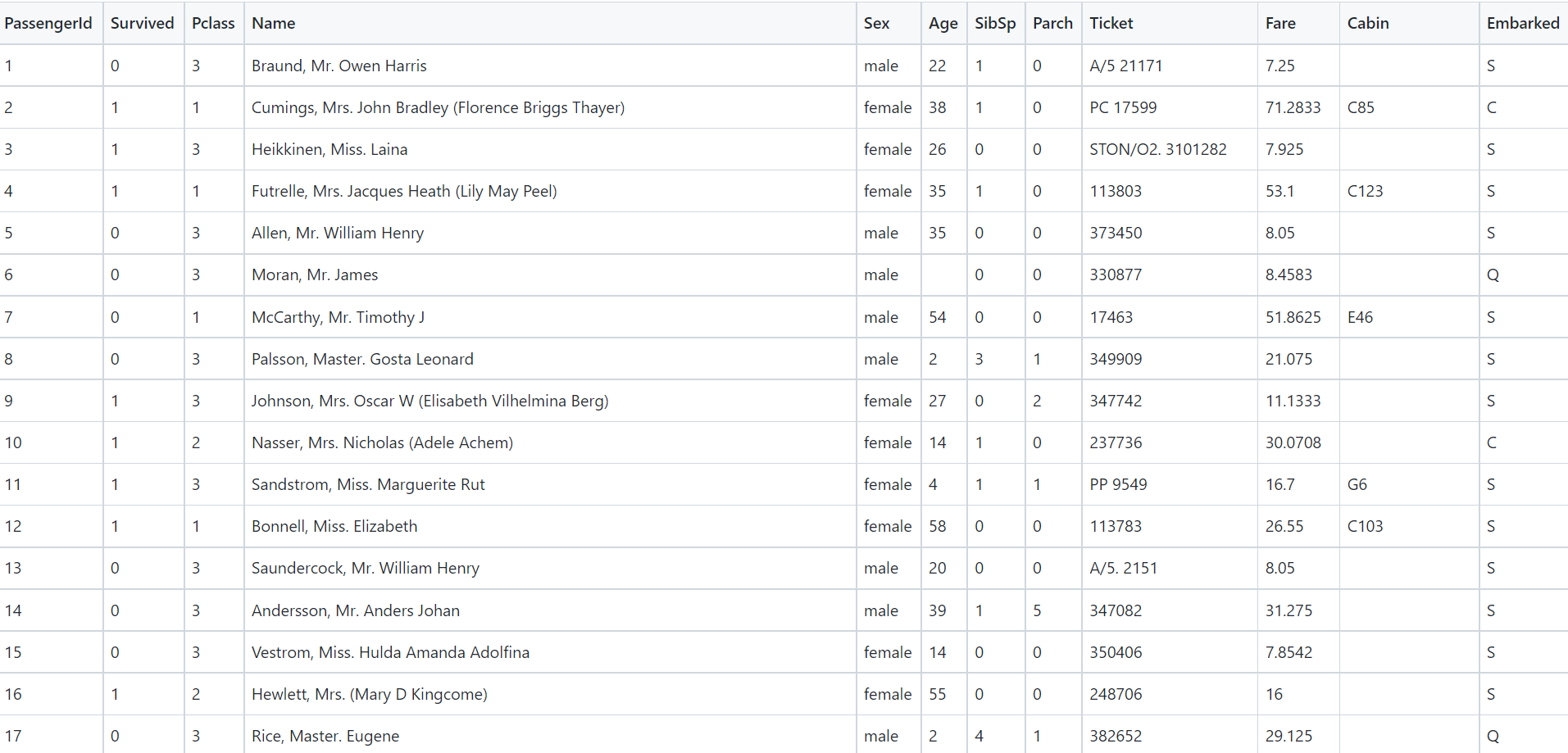
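To make the factorization concrete, here is a minimal Python sketch. The function name `class_conditional_likelihood`, the table layout, and the probability values are all assumptions made for illustration; they are not taken from the Titanic data.

```python
import math

def class_conditional_likelihood(x, feature_tables):
    """Return p(x | y=c) as a product of per-feature terms p(x_j | y=c).

    x: dict mapping feature name -> observed value
    feature_tables: dict mapping feature name -> {value: p(x_j = value | y=c)}
    """
    # Sum logs instead of multiplying raw probabilities to limit underflow
    # when there are many features. Assumes every probability is > 0.
    log_lik = sum(math.log(feature_tables[name][value]) for name, value in x.items())
    return math.exp(log_lik)

# Hypothetical per-feature probabilities for a single class (illustrative only)
tables_for_class = {
    "Sex": {"male": 0.25, "female": 0.75},
    "Pclass": {1: 0.5, 2: 0.25, 3: 0.25},
}
likelihood = class_conditional_likelihood({"Sex": "male", "Pclass": 1}, tables_for_class)
```

Each feature contributes one factor, looked up in its own one-dimensional table, which is exactly what the product over \(j\) expresses.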

For our Titanic example, the goal is to predict whether passenger \(i\) survives, given features such as Pclass, Name, and Sex. Naive Bayes uses the dataset to learn a model that takes a passenger's features and predicts whether that passenger survives. By Bayes' theorem, the goal is therefore to compute

$$p(y=c\mid x, D) \propto p(y=c\mid D) \prod_{j=1}^D p(x_j\mid y=c, D)$$

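and choose the class with the highest probability. Here the \(D\) after the conditioning bar denotes the training dataset, while the \(D\) above the product is the number of features. For example, for a male passenger in passenger class 1 (Pclass=1), the posterior probability of survival satisfies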

$$p(y=1\mid x, D) \propto p(y=1\mid D) p(Sex=Male \mid y=1, D)p(Pclass=1\mid y=1, D)$$
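The expression above gives the posterior only up to a constant. As a worked step, assuming the two classes are labelled \(y=1\) (survived) and \(y=0\) (did not survive), and writing \(s_c\) for the unnormalized score of class \(c\) (a symbol introduced here for illustration), the probability itself is obtained by dividing by the sum of both scores:

$$s_c = p(y=c\mid D)\, p(Sex=Male \mid y=c, D)\, p(Pclass=1\mid y=c, D), \qquad p(y=1\mid x, D) = \frac{s_1}{s_0 + s_1}$$

If only the predicted class is needed, this normalization can be skipped, since it does not change which score is largest.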

If we train the model with maximum likelihood estimation (MLE), \(p(y=1\mid D)\) is simply the fraction of passengers in the dataset who survived, \(p(Sex=Male \mid y=1, D)\) is the fraction of survivors who were male, and \(p(Pclass=1\mid y=1, D)\) is the fraction of survivors who travelled in passenger class 1. We can also train the model with maximum a posteriori (MAP) estimation or by computing the full posterior in a Bayesian setting.
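The counting view of training can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the names `fit_naive_bayes` and `posterior` are hypothetical, the six rows are made-up toy data (not the real Titanic dataset), and the optional `alpha` pseudo-count is one common way to get a MAP-style estimate (adding `alpha` to every count matches the MAP estimate under a symmetric Dirichlet prior with concentration `alpha + 1`). Class priors are left unsmoothed here for simplicity.

```python
from collections import Counter, defaultdict

def fit_naive_bayes(rows, labels, alpha=0.0):
    """Estimate class priors and per-feature conditionals by counting.

    alpha=0 gives the MLE fractions; alpha>0 adds a pseudo-count to every cell.
    """
    n = len(labels)
    class_counts = Counter(labels)
    priors = {c: class_counts[c] / n for c in class_counts}

    # All values seen for each feature, needed for the smoothing denominator
    values = defaultdict(set)
    # counts[c][feature][value] = number of class-c rows with that value
    counts = {c: defaultdict(Counter) for c in class_counts}
    for row, c in zip(rows, labels):
        for feature, value in row.items():
            values[feature].add(value)
            counts[c][feature][value] += 1

    conditionals = {
        c: {
            f: {
                v: (counts[c][f][v] + alpha) / (class_counts[c] + alpha * len(values[f]))
                for v in values[f]
            }
            for f in values
        }
        for c in class_counts
    }
    return priors, conditionals

def posterior(x, priors, conditionals):
    """Normalized p(y=c | x): prior times product of per-feature terms."""
    scores = {}
    for c, prior in priors.items():
        score = prior
        for feature, value in x.items():
            score *= conditionals[c][feature][value]
        scores[c] = score
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

# Made-up toy data, NOT the real Titanic dataset (label 1 = survived)
rows = [
    {"Sex": "male", "Pclass": 1},
    {"Sex": "female", "Pclass": 1},
    {"Sex": "female", "Pclass": 3},
    {"Sex": "male", "Pclass": 3},
    {"Sex": "male", "Pclass": 3},
    {"Sex": "male", "Pclass": 1},
]
labels = [1, 1, 1, 0, 0, 0]

priors, conditionals = fit_naive_bayes(rows, labels)
probs = posterior({"Sex": "male", "Pclass": 1}, priors, conditionals)
```

With `alpha=0`, a feature value never observed for a class gets probability zero, which zeroes out that class's whole product; passing a small positive `alpha` avoids this.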

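To close, let's discuss some advantages of Naive Bayes. Because it assumes conditional independence among features, each \(p(x_j\mid y=c)\) is estimated separately as a one-dimensional distribution, so the number of parameters grows linearly with the number of features rather than exponentially. This mitigates the curse of dimensionality: the model needs relatively little data to train, and training is fast because, for categorical features under MLE, it amounts to counting. Moreover, it has proven to work well in document classification and spam filtering.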
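To give a rough sense of the savings, the sketch below compares the number of free parameters needed for an unrestricted class-conditional table with the number needed under the Naive Bayes factorization. The function names and the setting (10 binary features, 2 classes) are hypothetical, and the counts assume every feature is categorical with the same number of values.

```python
def full_joint_params(num_features, values_per_feature, num_classes):
    """Free parameters for an unrestricted p(x | y=c) table per class."""
    return num_classes * (values_per_feature ** num_features - 1)

def naive_bayes_params(num_features, values_per_feature, num_classes):
    """Free parameters when each feature gets its own p(x_j | y=c) table."""
    return num_classes * num_features * (values_per_feature - 1)

# Hypothetical setting: 10 binary features, 2 classes
full = full_joint_params(10, 2, 2)    # 2 * (2**10 - 1) = 2046
naive = naive_bayes_params(10, 2, 2)  # 2 * 10 * (2 - 1) = 20
```

The gap widens quickly as features are added, which is why the factorized model can be fit from far fewer examples.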